In 2014 I designed & built a 3-node GlusterFS cluster. GlusterFS is Red Hat’s distributed filesystem, and it can provide a storage solution that scales well for some purposes.

Each of the three server nodes was built as follows:

- 2U SuperMicro enclosure, providing 12×3.5″ disks at the front, & 2×2.5″ internal disks for the OS

- SuperMicro X10SLM-F motherboard, Intel Xeon Processor

- 32GB RAM

- High-performance LSI 9280-4i4e SAS controller

- Intel 10GbE NIC

- 2×Intel SSDs for the OS

- 12×4TB Hitachi disks, chosen for their high reliability and low cost

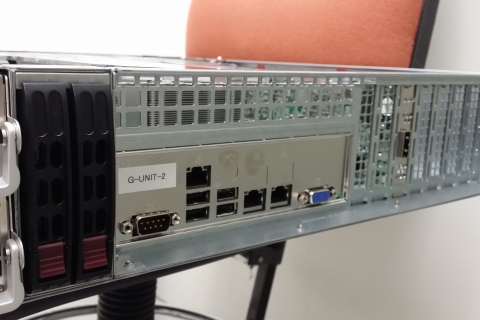

The boards didn’t have many slots, but enough for the RAID controller, the 10GbE NIC, and a SAS HBA:

A 4U SuperMicro enclosure provided a further 45×3.5″ disk bays – 24 disk bays accessible from the front, and 21 disk bays accessible from the back. This 4U 45-disk enclosure was attached to the first of the 3 server nodes.

The disks were grouped into 12-disk RAID6 volumes, each providing 40 TB usable storage. The RAID volumes were formatted with XFS & then added to the GlusterFS cluster as “bricks”. GlusterFS joins the bricks together to provide a filesystem which spans all the bricks, and which can be easily expanded by simply adding new bricks.

Each 45-disk enclosure provides 180 TB of raw disk, provisioned as 148 TB of RAID6 and divided into 10TB bricks. The last 8 TB in each enclosure was not enough to form a brick, so it was left over as a small test/scratch volume, not part of the GlusterFS. Each 45-bay enclosure thus provides 140 TB of storage to the GlusterFS (minus a small amount for the XFS filesystem overhead).
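The enclosure arithmetic can be checked with a short Python sketch. It assumes, since the text does not spell this out, that the 45 bays are split into three 12-disk RAID6 groups plus one 9-disk RAID6 group, which is one layout that reproduces the 148 TB figure. The function and constant names are purely illustrative.

```python
DISK_TB = 4        # 4 TB (decimal) per disk, as in the build list
RAID6_PARITY = 2   # RAID6 gives up two disks' worth of capacity per group
BRICK_TB = 10      # brick size quoted in the text


def raid6_usable_tb(disks: int) -> int:
    """Usable capacity of one RAID6 group, ignoring filesystem overhead."""
    return (disks - RAID6_PARITY) * DISK_TB


def enclosure_layout(bays: int = 45, group_size: int = 12) -> dict:
    """Split an enclosure into RAID6 groups, then carve the result into bricks."""
    # ASSUMPTION: full 12-disk groups first, remaining bays form one smaller group.
    full_groups, remainder = divmod(bays, group_size)
    groups = [group_size] * full_groups + ([remainder] if remainder else [])
    usable = sum(raid6_usable_tb(g) for g in groups)
    bricks, scratch = divmod(usable, BRICK_TB)
    return {
        "raw_tb": bays * DISK_TB,
        "raid6_tb": usable,
        "bricks": bricks,
        "gluster_tb": bricks * BRICK_TB,
        "scratch_tb": scratch,
    }


if __name__ == "__main__":
    print(enclosure_layout())
```

With the defaults this gives 180 TB raw, 148 TB of RAID6, 14 bricks (140 TB) for GlusterFS and 8 TB of scratch, matching the figures quoted here.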

In early 2016 we purchased a second 45-bay enclosure, and by the time I left Fugro, we’d filled this enclosure with disks. This 2nd 4U enclosure was of course attached to the second node, leaving the third node ready to take a 3rd 4U enclosure when required.

The second 45-disk enclosure brought the cluster’s usable storage capacity to 400 TB (140TB x 2 + 40TB x 3), and when the third enclosure was added after I left, that will have taken the 3-node cluster to 540 TB, or just over half a petabyte.
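As a quick sanity check on those totals, here is a minimal Python sketch of how capacity grows as enclosures are added. It takes the 40 TB per node and 140 TB per enclosure figures from the text as given; the names are illustrative.

```python
NODE_TB = 40        # one 12-disk RAID6 volume inside each server node
ENCLOSURE_TB = 140  # GlusterFS share of one 45-bay enclosure


def cluster_usable_tb(nodes: int, enclosures: int) -> int:
    """Usable GlusterFS capacity in TB, before XFS overhead."""
    return nodes * NODE_TB + enclosures * ENCLOSURE_TB


if __name__ == "__main__":
    for enclosures in range(4):
        print(f"{enclosures} enclosure(s): {cluster_usable_tb(3, enclosures)} TB")
```

For three nodes this steps through 120, 260, 400 and 540 TB as the enclosure count goes from zero to three.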

If (or when) the cluster needs to be expanded further, there are a variety of options: add more nodes, or expand the 3 existing nodes with one or more additional 45-bay enclosures. Additional enclosures can be easily attached to the existing nodes by adding another controller to each node, or by daisy-chaining the second enclosure from the output SAS port on the first enclosure. Another option at some point would be to start using higher capacity disks, progressively retiring the oldest 4TB drives as they are replaced with larger ones.

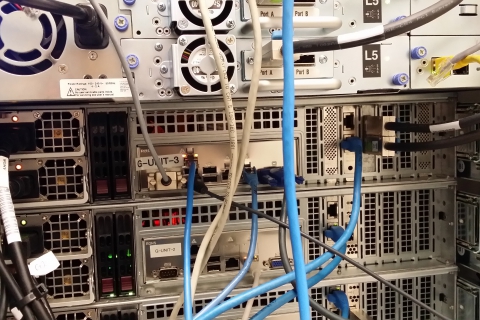

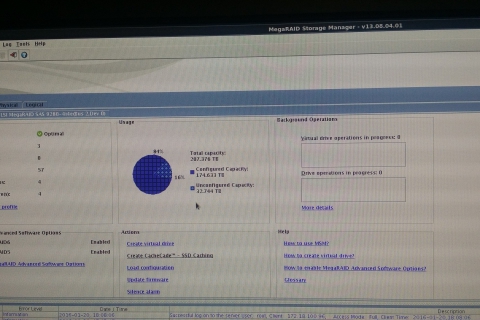

Here are a couple of shots of the cluster in 2015, before we attached the second 4U 45-disk enclosure, plus a shot of the LSI MegaRAID manager, showing 57 disks & 207 TiB attached to the 1st node in the cluster:

Note: the 207 TB (Terabytes) of raw disk space reported by the MegaRAID storage manager is actually 207 TiB (Tebibytes). LSI have been a bit sloppy there. This is the 45 disks in the enclosure plus the 12 disks in the node, i.e. 180 TB (enclosure) + 48 TB (node) = 228 Terabytes (TB) ≈ 207 Tebibytes (TiB).
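The unit conversion is easy to verify. A small Python sketch, with an illustrative helper name, using the decimal definition of a terabyte (10^12 bytes) and the binary definition of a tebibyte (2^40 bytes):

```python
def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


# 45 enclosure disks plus 12 node disks, 4 TB each
raw_tb = (45 + 12) * 4

if __name__ == "__main__":
    print(f"{raw_tb} TB = {tb_to_tib(raw_tb):.1f} TiB")
```

This works out to roughly 207.4 TiB for 228 TB, which is consistent with the 207 shown by the storage manager.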